Imagine you are enjoying a well-brewed cup of Gyokuro green tea from Japan while conducting typical Search Engine Optimization maintenance. Unexpectedly, you discover something that causes you to drop your perfectly brewed cup of green tea, to yell unholy words, to start sweating bullets despite the perfect 70-degree room. After further analysis you feel slight relief knowing that you caught this early and saved what could have been a website disaster.

A robots.txt file overwrite that disallows all pages on the site (Disallow: /) may, surprisingly, not hurt your organic performance. As you know, a robots.txt file is a text file in your website’s root directory that tells search engine robots/spiders how to crawl your site and which areas of the website they may not crawl. One case study shows that this overwrite error did not cause a major drop in search engine rankings or website traffic. You may have thought that a Disallow: / would be the end of the world, but with an organized SEO maintenance program, your site’s organic performance can be saved and you can continue enjoying your cup of Gyokuro green tea… or Sencha if you so desire.

Mini case study and setting

- Client: Client X

- Focal location: Global

- Average organic website traffic per month: ~400K (US, B2B)

- Timeframe: 11/22/2013 – 12/02/2013

- Search engine: Google (Bing & Yahoo were >5% of the total traffic)

- Measurement: Organic website visits, Google organic ranking positions

Visual Timeline of the Event:

Friday, 11/22/2013: On a late Friday afternoon, Client X was conducting a server migration practice test. Presumably, everything went smoothly for the web development team, except for uploading an incorrect robots.txt file. The reaction from Client X on Monday indicated that the team was not aware of the accidental overwrite.

Sunday, 11/24/2013: The overwritten file, discovered on Sunday evening, read as follows:

User-agent: *

Disallow: /

Disallow: / tells compliant search engines not to crawl anything under www.domain.com/ (i.e., all pages on your website).
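To see the effect of the directive in practice, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The helper name `is_allowed`, the sample paths, and the choice of "Googlebot" as the user agent are assumptions made for illustration; this parser only approximates how a real search engine interprets the file.

```python
from urllib.robotparser import RobotFileParser

# The overwritten file from the case study.
BLOCK_ALL = """\
User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if user_agent may crawl url under the given robots.txt text.

    Hypothetical helper for illustration only.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Sample paths are made up; www.domain.com is the placeholder used in the article.
    for path in ("/", "/products/", "/blog/post.html"):
        url = "http://www.domain.com" + path
        print(path, is_allowed(BLOCK_ALL, url))
```

With the overwritten file, every path should be reported as not allowed, which matches the "blocked from crawling all site pages" behavior described in this timeline.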

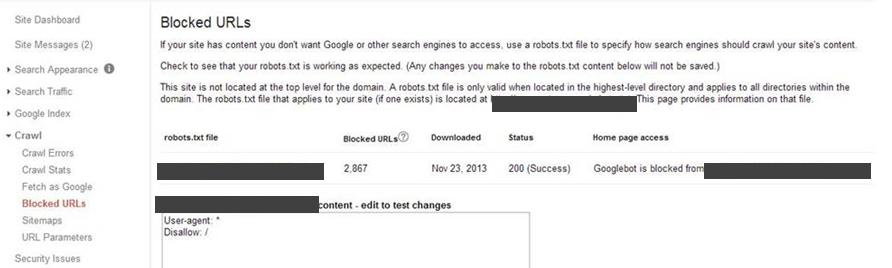

When I investigated whether the search engines had seen this overwrite, the Google Webmaster Tools account read:

This means that Google not only crawled the new robots.txt file, but followed its orders and was blocked from crawling all site pages. I then decided to check how many pages were de-indexed and found this from Google:

However, I only found about three important pages that were de-indexed while almost all others were still indexed with a cached date of a week prior. I then did manual ranking checks and found that the ranking positions did not drop for the pages that were still indexed.

Monday, 11/25/2013: After the errant robots.txt with the bad Disallow directive was reported, Client X corrected the error and uploaded a robots.txt file with the correct directives early Monday morning. We tried to fetch the URL and re-submit the sitemap in Google Webmaster Tools. The corrected file lived at www.domain.com/robots.txt, but after we submitted the URL, Google continued to give this response: “Google is blocked from www.domain.com.” Therefore, we could only nervously wait while monitoring the site’s overall performance.

Tuesday, 11/26/2013: Around midnight on Tuesday, Google re-crawled the robots.txt file. A ranking report showed that the average position had slipped slightly from Monday. As we waited for more pages to be re-crawled, we noticed this errant meta description in the search results for a high-level keyword phrase used by Client X:

“A description for this result is not available because of this site’s robots.txt – learn more.”

I was obviously concerned about the effect this would have on click-through rate (CTR), but thanks to the swift mitigation, the CTR was not greatly impacted. Soon after, Google re-crawled this page and displayed the correct meta description in the SERPs.

Monday, 12/2/2013: On Monday, it was time to run another ranking report and compare a week’s worth of traffic numbers. The results were surprising.

Results:

Average ranking positions of the top 300 keywords:

| | Previous 11/7/2013 | 11/25/2013 | Baseline Change 1 | 11/26/2013 | Baseline Change 2 | 12/2/2013 | Baseline Change 3 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Average ranking position | 16.44 | 15.16 | +1.29 | 15.49 | +0.95 | 15.17 | +1.27 |

Yes, after one week, the ranking positions had actually improved from the baseline! This does not mean you should block crawlers via your robots.txt file in the hope that your ranking positions will improve. Client X’s ranking positions might have improved even more had it not been for the incorrect Disallow: / directive. Additionally, imagine the impact this would have had on a large website like Amazon, which Google crawls every few minutes instead of every 24 hours. Without SEO monitoring and maintenance, the page traffic and revenue lost could have run into the millions within days.
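The baseline-change figures appear to be the 11/7/2013 baseline position minus the later position, so a positive number means the average position moved closer to the top. That reading is an assumption, as is the function name `baseline_change`. A quick sketch to reproduce the arithmetic from the published, rounded averages:

```python
BASELINE = 16.44  # average position on 11/7/2013

LATER_POSITIONS = {
    "11/25/2013": 15.16,
    "11/26/2013": 15.49,
    "12/2/2013": 15.17,
}


def baseline_change(baseline: float, position: float) -> float:
    """Positive result = improvement (a numerically lower average position)."""
    return round(baseline - position, 2)


if __name__ == "__main__":
    for date, position in LATER_POSITIONS.items():
        print(f"{date}: {baseline_change(BASELINE, position):+.2f}")
```

Computed this way, the first change comes out as +1.28 rather than the reported +1.29; the small gap is presumably because the report was calculated from unrounded averages.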

Why SEO Maintenance and Monitoring Works

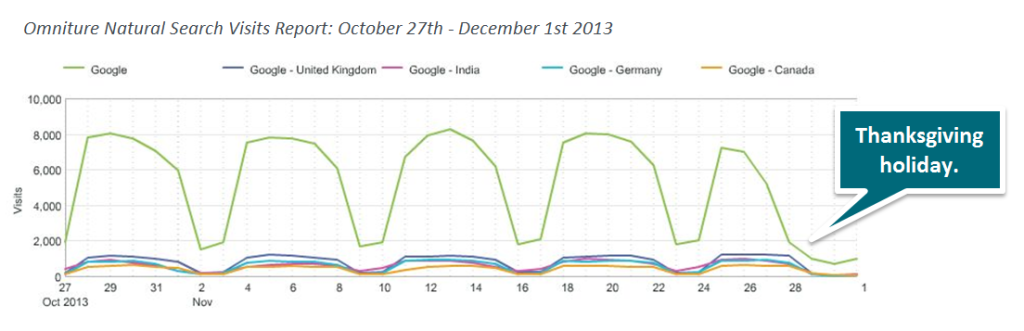

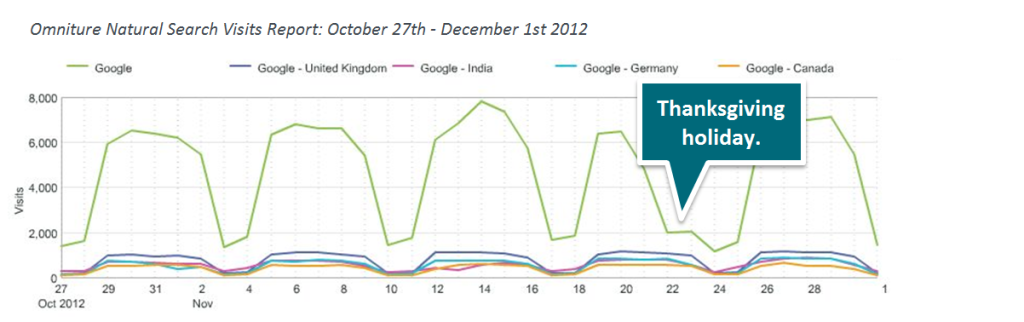

As you can see, traffic during Thanksgiving week in 2013 was higher than in 2012. There were no significant traffic decreases to report.

Because Client X has an SEO maintenance program that enabled Covario to swiftly rectify the error, there was no significant drop in performance.

Actionable Insights and Key Takeaways:

- Investment in SEO site monitoring & maintenance is essential for any company focusing on sustaining and/or improving their search engine visibility.

- Always notify your SEO team before conducting any page refreshes, site migrations, or server relocations so that they can monitor the changes.

- Set up automatic notifications when the robots.txt file is altered to decrease the risk that crawlers will discontinue all site indexing.
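As one possible way to act on the last takeaway, here is a minimal Python sketch of a robots.txt check that could run on a schedule. Everything in it is an assumption for illustration: the function names, the idea of comparing against a stored hash of a known-good file, and the alert wording. How alerts are delivered (email, chat, and so on) is left out.

```python
import hashlib
import urllib.request
from urllib.robotparser import RobotFileParser


def fetch_robots(url: str) -> str:
    """Download the live robots.txt (for example http://www.domain.com/robots.txt)."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def fingerprint(robots_txt: str) -> str:
    """Hash the file contents so any change can be detected."""
    return hashlib.sha256(robots_txt.encode("utf-8")).hexdigest()


def blocks_whole_site(robots_txt: str, user_agent: str = "Googlebot") -> bool:
    """True if the given user agent may not crawl the site root."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "http://www.domain.com/")


def check_robots(current: str, known_good_hash: str) -> list:
    """Return a list of alert messages; an empty list means nothing to report."""
    alerts = []
    if fingerprint(current) != known_good_hash:
        alerts.append("robots.txt changed")
    if blocks_whole_site(current):
        alerts.append("robots.txt blocks the whole site")
    return alerts


if __name__ == "__main__":
    # Hypothetical known-good file; a real setup would store its hash somewhere durable.
    known_good = "User-agent: *\nDisallow: /admin/\n"
    overwritten = "User-agent: *\nDisallow: /\n"
    print(check_robots(known_good, fingerprint(known_good)))
    print(check_robots(overwritten, fingerprint(known_good)))
```

Comparing a hash catches any edit, while the separate whole-site check singles out the specific Disallow: / mistake from this case study so it can be escalated immediately.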