Technical SEO is one of the most complicated subjects in SEO, yet it can make or break your SEO program. If you do not have a website development background, these topics may be difficult to understand. Before we get into technical SEO best practices, it is important to understand basic HTML website code.

In short, a website is coded in HTML (HyperText Markup Language), which is composed of tag names surrounded by angle brackets (e.g. “<html></html>”). If you want to see what this looks like, right-click on this web page and select “View Source” (for PC users). Web browsers, such as Internet Explorer, Chrome, or Firefox, render HTML code as visual content.

As mentioned, the technical side of your website is a very important organic ranking factor; some estimate that it accounts for about 20% of your organic rankings. The four most important areas are as follows:

- SEO-friendliness of your website code

- Crawl-ability of your content

- Architecture of your site and URL structure

- Server-side redirect codes

If this already confuses you, don’t worry; it is difficult for everyone. The sections below highlight technical SEO best practices, common issues, and recommendations for improvement. You can relay these recommendations to a website developer, who will be able to understand the issues and fix the problems.

HTML Code & Design Best Practices

Utilize HTML5: HTML5 is currently one of the most SEO-friendly markup languages, though this may change in the future. It supports the latest multimedia and is used consistently across different platforms, so it is recommended that you code your website in HTML5.

Responsive Design: Google recommends using responsive design. Do not create a separate mobile website with different URLs. Responsive web design is an approach to web design aimed at crafting sites that provide an optimal viewing and interaction experience across a wide range of devices (from desktop computer monitors to mobile phones). This will most likely make your website mobile-friendly, which is now an organic ranking factor. If you are unsure whether your site is mobile-friendly, check it with Google’s Mobile-Friendly Tool.

Avoid Using AJAX, Flash, Frames & iframes: Avoid using any of these technologies. There are ways to make them SEO-friendly, but doing so is very complicated, and you will need to hire a knowledgeable expert to make sure it is implemented properly.

Page Speed: Page speed measures how fast a web page loads, which affects organic rankings and usability. Use Google’s Page Speed Insights tool to see what score you get. The tool will tell you exactly how to improve, and you can copy and paste its recommendations and send them to your web developer. If you receive a score that is yellow or green for both desktop and mobile, you are probably OK. If you are in the red, especially for mobile phones, you may need to improve the site’s page speed.

URL, Hierarchy, and Navigation Best Practices

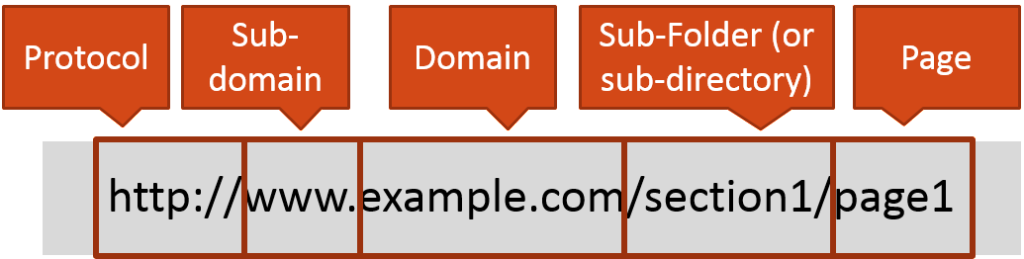

First, let’s discuss the structure of a URL before we get into best practices. A URL (Uniform Resource Locator) specifies the address of a web page or other resource on the World Wide Web. URLs have the following format: protocol://hostname/other-information. See the image above for a more detailed breakdown.
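As a rough illustration of the protocol://hostname/other-information format, the sketch below splits a URL into its parts with Python's standard library. The example URL, including its query string, is made up for demonstration.

```python
from urllib.parse import urlsplit

# Hypothetical URL used only to show the individual parts.
parts = urlsplit("https://www.example.com/cheese-bars?color=blue")

print("protocol:", parts.scheme)    # the part before "://"
print("hostname:", parts.hostname)  # the domain the page is served from
print("path:", parts.path)          # the page's location on the site
print("query:", parts.query)        # optional parameters after "?"
```

The path and query together make up the "other information" portion of the format.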

Avoid Duplicate URLs: Search engines see slight changes to URLs as unique pages. Remember, they are machines, not people. For example, if the same page loads both with and without a slash at the end of the URL, Google sees two separate pages. Below is a list of URLs that load the same content but are seen as separate, duplicate pages. Having duplicate content will negatively affect your organic rankings. Furthermore, duplicate pages dilute link equity. For example, if two bloggers want to link to your content (which loads at all of the URLs below) and one links to the first URL while the other links to the second, Google will not combine those links and credit them to a single page.

All are seen as different pages by search engines:

- http://www.example.com/page1

- http://www.example.com/page1/

- http://www.example.com/Page1

- https://www.example.com/page1

- http://example.com/page1

Solution: Tell your web developer to normalize all URLs. Implement a URL rewrite rule that uses a 301 redirect so that every URL is served in one preferred structure:

- Lower case

- Slash or no slash

- http or https

- www or non-www
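The normalization rules above can be sketched in a few lines of Python. This is only an illustration of the logic, not a server configuration: the three preference constants and the `normalize_url` function are hypothetical names, and the choices shown (https, www, no trailing slash) are arbitrary. In practice your developer would implement the same rules as 301 redirects on the web server.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical preferences; pick whichever forms your site standardizes on.
PREFERRED_SCHEME = "https"
USE_WWW = True
TRAILING_SLASH = False


def normalize_url(url: str) -> str:
    """Return the preferred form of a URL under the settings above."""
    parts = urlsplit(url)

    # Host names are case-insensitive, so lowercasing is always safe.
    host = parts.netloc.lower()
    if USE_WWW and not host.startswith("www."):
        host = "www." + host
    elif not USE_WWW and host.startswith("www."):
        host = host[len("www."):]

    # Lowercase the path and apply the trailing-slash preference.
    path = parts.path.lower() or "/"
    if path != "/":
        path = path.rstrip("/") + ("/" if TRAILING_SLASH else "")

    return urlunsplit((PREFERRED_SCHEME, host, path, parts.query, parts.fragment))


variants = [
    "http://www.example.com/page1",
    "http://www.example.com/page1/",
    "http://www.example.com/Page1",
    "https://www.example.com/page1",
    "http://example.com/page1",
]
for v in variants:
    print(v, "->", normalize_url(v))
```

With these settings, all five variants from the list above map to the same URL. Note that lowercasing the path assumes your site does not rely on case-sensitive paths.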

Add Canonical Tags When Appropriate: Another option for dealing with duplicate content is the rel=canonical HTML attribute. The rel=canonical attribute passes the same amount of link juice (ranking power) as a 301 redirect and often takes much less development time to implement. The tag belongs in the HTML head of a web page. Example: <link rel="canonical" href="http://www.example.com/page1/" />

Search engines will generally treat whatever URL you set as the canonical as the preferred version of the page. Be careful when using this HTML attribute, as it can cause further issues if implemented improperly.
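Because a wrong canonical can do real damage, it helps to check which canonical URL a page actually declares. The sketch below is one possible way to do that with Python's built-in HTML parser; the `CanonicalFinder` class, the `find_canonicals` function, and the sample HTML are all made up for illustration.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        # rel may hold several space-separated values.
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values and attr.get("href"):
            self.canonicals.append(attr["href"])


def find_canonicals(html: str) -> list:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


sample = """<html><head>
<title>Page 1</title>
<link rel="canonical" href="http://www.example.com/page1/" />
</head><body></body></html>"""

print(find_canonicals(sample))
```

A page should normally declare exactly one canonical URL, so an empty list or a list with several different entries is worth raising with your developer.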

Use Natural Language in URLs: Use natural language in URLs instead of dynamic URLs with numbers and symbols. Example: /cheese-bars instead of /?hastag=1&s=123&cat=13… This makes your site more SEO-friendly, and it lets you include keywords in the URLs. Most websites use natural language automatically. If yours does not, create URL rewrite rules using 301 redirects.
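Many content management systems build natural-language URLs by turning the page title into a "slug." The sketch below shows one common way this can be done; `slugify` is a hypothetical helper written for this example, not a function from any particular platform.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Strip accents so that, for example, "é" becomes "e".
    ascii_text = (
        unicodedata.normalize("NFKD", title)
        .encode("ascii", "ignore")
        .decode("ascii")
    )
    # Replace each run of non-alphanumeric characters with one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


print("/" + slugify("Cheese Bars"))
```

For the title "Cheese Bars" this produces the /cheese-bars path used in the example above.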

Have a Secured Site (HTTPS): Security is a top priority for Google. As part of its effort to make the internet a safer place, Google announced on August 6, 2014 that a secured site (HTTPS) is a positive ranking signal. Furthermore, on December 17, 2015 Google announced that it will look for the https version of a page even if no signals point to those URLs. If you are updating or creating a website, it is recommended to host the main website at https, buy a security certificate, and keep it up to date. Make sure your canonical tags and links are correct and point to https. Migrating an existing site is best reserved for more advanced SEO programs; if you do migrate, implement a URL rewrite rule using a 301 redirect from http to https.

Error Codes and Redirect Best Practices

404 or 410 Errors: The HTTP 404 Not Found error (or 410 Gone) means that the web page you were trying to reach could not be found. The page has most likely been removed, or its URL has changed. 404s may negatively affect your organic rankings, and a 404 does not pass any link equity or online authority from the old page to a new page. When appropriate, create a 301 redirect from the old page to the new page, which will pass link authority.

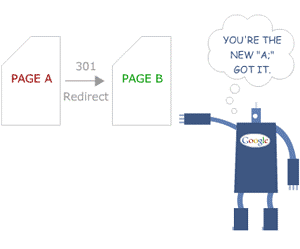

Redirects: A 301 redirect is a permanent redirect that passes between 90% and 99% of link juice (ranking power) to the redirected page. The number 301 refers to the HTTP status code for this type of redirect. In most instances, the 301 redirect is the best method for implementing redirects on a website. Avoid using a 302 or 307 temporary redirect unless you will be publishing the original page again within the next few weeks.

Furthermore, when implementing a 301 redirect, try to make it a one-to-one redirect that sends each old URL directly to its final destination. Google will follow a chain of up to 4 or 5 redirects, but may not pass the same authority through each redirect hop.
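One way to keep redirects one-to-one is to flatten any chains before handing the redirect list to your developer. The sketch below assumes you keep your redirects as a simple old-path to new-path mapping; the `resolve_redirects` function, its `max_hops` limit, and the example paths are hypothetical.

```python
def resolve_redirects(redirects: dict, max_hops: int = 5) -> dict:
    """Map every old path straight to its final destination.

    `redirects` maps an old path to the path it currently redirects to.
    Raises ValueError on a loop or a chain longer than `max_hops`.
    """
    flattened = {}
    for source in redirects:
        target = redirects[source]
        seen = {source}
        hops = 1
        # Keep following while the target is itself redirected.
        while target in redirects:
            if target in seen or hops >= max_hops:
                raise ValueError(f"Redirect loop or long chain starting at {source}")
            seen.add(target)
            target = redirects[target]
            hops += 1
        flattened[source] = target
    return flattened


chain = {
    "/old-page": "/newer-page",
    "/newer-page": "/newest-page",
}
print(resolve_redirects(chain))
```

In this example both /old-page and /newer-page end up pointing directly at /newest-page, so no visitor or crawler has to pass through more than one hop.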

Avoid Using Other Types of Redirects: Avoid using the following types of redirects, as they typically do not pass link equity or “link juice.”

- Meta Refresh Redirect: An example of a refresh tag is: <meta http-equiv="refresh" content="value"> This tag redirects you to another web page automatically when the page loads. Search engines typically do not see this, and it does not pass “link juice.”

- JavaScript Redirect: A redirect implemented with JavaScript code. Google can execute JavaScript code (if it is not blocked by robots.txt or a meta robots tag). However, the redirect may not transfer “link juice.”

Pages for Search Engines

Make sure you have the following pages or correct coding on your website. These pages help search engines find and crawl the content you want throughout your website.

XML Sitemap: An XML sitemap is a document that helps Google and other major search engines crawl your web pages and content. Most websites already have a sitemap. It is recommended to host this page at /sitemap.xml (or as a .gz file), though you can name the file something different. Make sure to submit the sitemap to Google in Google Search Console.
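If your site does not generate a sitemap automatically, a basic one is simple to produce. The sketch below builds a minimal sitemap with Python's standard library, listing only the page addresses; the `build_sitemap` function and the two example URLs are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list) -> str:
    """Return a sitemap XML document listing each URL in a <loc> element."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


pages = [
    "https://www.example.com/",
    "https://www.example.com/page1",
]
print(build_sitemap(pages))
```

The resulting text can be saved as sitemap.xml. List only the preferred (canonical) version of each page so that the sitemap does not reintroduce duplicate URLs.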

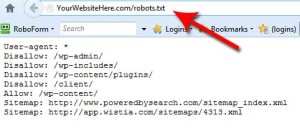

Robots.txt: The robots.txt is a text file webmasters create to instruct robots (typically search engine robots) how to crawl and index pages on their website. Most websites will already have this. You must name this file exactly “/robots.txt” and host it at your domain (not within a sub-folder). Submit this file to Google Search Console.

Furthermore, it is recommended to allow search engines to crawl your JavaScript and CSS files. Only disallow sensitive or private information. This file is incredibly important and can cause major errors if not implemented properly. Feel free to read more about this: I have compiled a case study on the effects of a robots.txt disallow: /.
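Before publishing a robots.txt change, you can test which URLs it would block. The sketch below uses the robots.txt parser in Python's standard library, which may not match Google's own interpretation in every edge case. The sample rules, the `is_crawlable` helper, and the example paths are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt that blocks only a private folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawlable(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Report whether `user_agent` may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for path in ("/page1", "/assets/site.css", "/private/report"):
    url = "https://www.example.com" + path
    print(path, is_crawlable(ROBOTS_TXT, url))

# The same check against a blanket "Disallow: /" rule.
print(is_crawlable("User-agent: *\nDisallow: /\n", "https://www.example.com/page1"))
```

With the sample rules, the regular page and the CSS file remain crawlable while the private folder is blocked. The final check shows why a blanket disallow is so dangerous: it blocks every page on the site.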

Robots Meta Tag: A meta robots tag, placed in the head section of your HTML page, is an alternative to disallowing a page in the robots.txt file. Example: <meta name="robots" content="noindex,nofollow" />. This means that Google will not index the page or follow any links from the page. Only use this for sensitive or private information. If you are considering using this for duplicate content, please use a canonical tag instead.
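A stray noindex tag left on a public page can quietly remove it from search results, so it is worth checking which directives a page declares. The sketch below is one possible check using Python's built-in HTML parser; the `RobotsMetaFinder` class, the `robots_directives` function, and the sample HTML are made up for this example.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            # The content value is a comma-separated list of directives.
            for item in (attr.get("content") or "").split(","):
                if item.strip():
                    self.directives.add(item.strip().lower())


def robots_directives(html: str) -> set:
    finder = RobotsMetaFinder()
    finder.feed(html)
    return finder.directives


sample = '<html><head><meta name="robots" content="noindex,nofollow" /></head></html>'
directives = robots_directives(sample)
print("indexable:", "noindex" not in directives)
print("links followed:", "nofollow" not in directives)
```

A page with no robots meta tag returns an empty set, which means no restrictions are declared in the HTML itself.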

Summary

These are very basic technical SEO best practices. You may need to work with a web developer and an SEO expert to really make sure your website is SEO-friendly. However, this overview should help anyone making updates to your website stay aware of these issues and avoid causing major problems.

I encourage you to double check whether you have any of the technical SEO issues listed above. If so, please implement my recommendations. If you have further questions, feel free to contact me directly.